In this article we'll look at how you can use the Generative Pre-trained Transformer 3 (GPT-3) API from OpenAI to generate text content from a string prompt using Python code.

If you're familiar with ChatGPT, the GPT-3 API works in a similar way to that application: you give it a piece of text and it gives you back a piece of text in response. This is because ChatGPT is related to GPT-3, but presents its output in a chat application instead of through direct API calls.

If you've ever thought it would be cool to integrate transformer-based models into your own code, GPT-3 lets you do that through its Python API.

In this article we'll explore how to work with GPT-3 from Python code to generate content from your own prompts, and how much that may cost you.

Note: This article is heavily inspired by part of Scott Showalter's excellent talk on building a personal assistant at CodeMash 2023, and I owe him credit for showing me how simple it was to call the OpenAI API from Python.

Why use GPT-3 when ChatGPT is Available?

Before we go deeper, I should state that GPT-3 is older than ChatGPT and is a precursor to that technology. Given this, a natural question that arises is "why would I use the old way of doing things?"

The answer to this is fairly simple: ChatGPT is built as an interactive chat application that directly faces the user. GPT-3, on the other hand, is a full API that can be given whatever prompts you want.

For example, if you needed to quickly draft an email to a customer for review and revision, you could give GPT-3 a prompt of "Generate a polite response to this customer question (customer question here) that gives them a high level overview of the topic".

GPT-3 would then give you back the text it generated, and your support team could make changes to that text and then send it on.

This way, GPT-3 lets you save time writing responses, but still gives you editorial control to fact-check its outputs and avoid the types of confusion you might see from interactions with ChatGPT, for example.
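As a small illustration of that workflow, the sketch below fills the example prompt template from above with an actual customer question, producing the string you would pass to GPT-3 as the prompt. build_support_prompt is a hypothetical helper written for this example, not part of the openai package.

```python
def build_support_prompt(customer_question: str) -> str:
    """Fill the example support prompt template with a customer's question.

    build_support_prompt is a hypothetical helper, not part of the openai package.
    """
    question = customer_question.strip()
    if not question:
        raise ValueError("customer_question must not be empty")
    return (
        "Generate a polite response to this customer question "
        f"({question}) that gives them a high level overview of the topic"
    )


if __name__ == "__main__":
    print(build_support_prompt("How do I reset my password?"))
```

The returned string is just the prompt; your support team still reviews whatever GPT-3 generates from it before anything is sent.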

GPT-3 is useful for any situation where you want to generate text from a specific prompt and then review it for possible use later on.

Getting a GPT-3 API Key

Before you can use GPT-3, you need to create an account and get an API key from OpenAI.

Creating an account is fairly simple, and you may have already done so if you've interacted with ChatGPT.

First, go to the Log in page and either log in using your existing account or your Google or Microsoft account.

If you don't have an account yet, you can click the Sign up link to register.

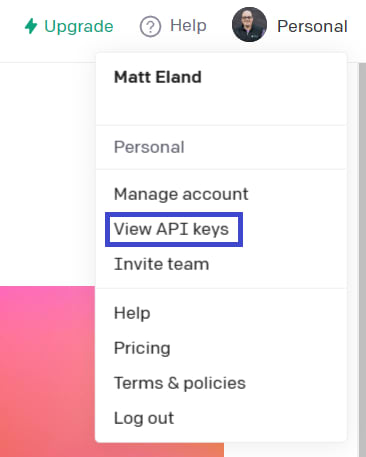

Once you've logged in, you should see a screen like the following:

While there are a number of interesting links to documentation and examples, what we care about is getting an API key that we can use in Python code.

To get this API key, click on your picture and organization name in the upper right and then select View API Keys.

From here, click + Create new secret key to generate a new secret key. This key will only be visible once and you won't be able to recover it, so copy it down somewhere safe.

Once you have the API key, it's time to move into Python code.

Importing OpenAI and Specifying your API Key

For the remainder of this article, I'll be giving you bits and pieces of code that can go into a .py file.

If you're following along with these steps, you might choose to call this gpt3.py and use some version of PyCharm.

The first thing we'll need to do is install the OpenAI dependency. You could use PyCharm's package manager pane to do this, but a more universal way would be to use pip or similar to install the openai dependency as follows:

```shell
pip install openai
```

The code in this article uses the interface from openai releases before 1.0 (ai.Completion and ai.Model). Version 1.0 replaced that interface with a client-based one, so if pip installs 1.0 or later, you can pin an older release with pip install "openai<1.0" to run the examples as written.

Once that's complete, add a couple of lines to import OpenAI and set the API key to the one you got earlier:

```python
import openai as ai

ai.api_key = 'sk-somekeygoeshere'  # replace with your key from earlier
```

This code should work, but it's a really bad practice to have a key directly embedded in your file for a variety of reasons:

Tying a file to a specific key reduces the flexibility you have to use the same code in different contexts later on.

Putting access tokens in code is a very bad move if your code ever leaves your organization, or even if it reaches a team or person who should not have access to that resource.

It's a particularly bad idea to push code to GitHub or other public code repositories where it might be spotted by others, including automated bots that search for keys in public code.

Instead, a better practice would be to declare the API key as an environment variable and then use os to get that key out by its name:

```python
import os

import openai as ai

# Get the key from an environment variable on the machine it's running on
ai.api_key = os.environ.get("OPENAI_API_KEY")
```
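One gap with os.environ.get is that a missing variable quietly becomes None, so you only find out when the first request fails. Here's a minimal sketch of a stricter loader that fails fast with a clear message. load_api_key is a hypothetical helper name, not part of the openai package.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read an API key from the environment, failing fast if it is missing.

    os.environ.get() returns None for an unset variable, which would otherwise
    only surface later as an error from the API call.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Environment variable {var_name} is not set; "
            "export your OpenAI API key before running this script."
        )
    return key
```

With this in place, the assignment becomes ai.api_key = load_api_key().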

Once you have the key set in OpenAI, you're ready to start generating predictions.

Creating a Function to Generate a Prediction

Below I have a short function that makes a call out to OpenAI's Completions API to generate a series of text tokens from a given prompt:

```python
def generate_gpt3_response(user_text, print_output=False):
    """
    Query OpenAI GPT-3 with the user's text and get back a response

    :type user_text: str the user's text to query for
    :type print_output: boolean whether or not to print the raw output JSON
    """
    completions = ai.Completion.create(
        engine='text-davinci-003',  # Determines the quality, speed, and cost.
        temperature=0.5,            # Level of creativity in the response
        prompt=user_text,           # What the user typed in
        max_tokens=100,             # Limits how many tokens the response can use
        n=1,                        # The number of completions to generate
        stop=None,                  # Optional sequence(s) where generation stops
    )

    # Displaying the output can be helpful if things go wrong
    if print_output:
        print(completions)

    # Return the first choice's text
    return completions.choices[0].text
```

I've tried to make the code above fairly well-documented, but let's summarize it briefly:

The code takes some user_text and passes it along to the openai library, along with a series of parameters (which we'll cover shortly).

This code then calls the OpenAI Completions API and gets back a response that includes an array of generated completions as completions.choices. In our case, this array will always have 1 completion, because n is set to 1.
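If you call the function with print_output=True, the printed JSON has roughly the shape of SAMPLE_RESPONSE below. As a sketch, here's how you might pull the generated text and finish reason out of a plain dictionary in that shape. The sample values are invented for illustration (not real API output), and first_choice_text is a hypothetical helper.

```python
from typing import Any, Dict, Tuple

# Illustrative response in the shape of a Completions API result.
# The values are invented for this example; only the structure matters.
SAMPLE_RESPONSE: Dict[str, Any] = {
    "object": "text_completion",
    "model": "text-davinci-003",
    "choices": [
        {"text": "\n\nHello there!", "index": 0, "logprobs": None, "finish_reason": "stop"}
    ],
    "usage": {"prompt_tokens": 4, "completion_tokens": 4, "total_tokens": 8},
}


def first_choice_text(response: Dict[str, Any]) -> Tuple[str, str]:
    """Return (text, finish_reason) for the first choice, with whitespace stripped from the text."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    first = choices[0]
    return first["text"].strip(), first.get("finish_reason") or ""


if __name__ == "__main__":
    text, reason = first_choice_text(SAMPLE_RESPONSE)
    print(text, reason)
```

A finish_reason of "length" means the completion was cut off by max_tokens rather than ending naturally, which is a useful hint that max_tokens is set too low.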

temperature might sound unusual, but the term is borrowed from statistical physics (the same idea behind simulated annealing, an optimization technique inspired by metallurgy). Here it controls how much randomness goes into choosing each next token: a low temperature (near 0) will give very well-defined answers consistently, while a higher number (near 1) will be more creative in its responses.
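To see what temperature does, here's a small sketch of temperature-scaled softmax, the standard way sampling temperature is applied to a model's next-token scores: the scores are divided by the temperature before being turned into probabilities. The three scores are made-up numbers, and this illustrates the general technique rather than OpenAI's internal code.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities, sharpened (low T) or flattened (high T)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    scores = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
    for t in (0.2, 0.5, 1.0):
        probs = softmax_with_temperature(scores, t)
        print(t, [round(p, 3) for p in probs])
```

At 0.2 almost all of the probability lands on the top-scoring token, so answers are consistent; at 1.0 the lower-scoring tokens get picked noticeably more often, which reads as more "creative" output.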

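I want to highlight the max_tokens parameter. Here a token is a word, part of a word, or a piece of punctuation from either the input prompt (user_text) or the output.

max_tokens caps how many tokens GPT-3 may generate in its response. Your prompt's tokens don't count against that cap, but they do count toward the model's total context length (the prompt plus max_tokens must fit within it) and toward what you pay. This means that if you give GPT-3 a max_tokens of 10 and a prompt of "How are you?", the prompt uses about 4 tokens and the response you get will be at most 10 tokens long.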
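Exact token counts require the model's tokenizer (OpenAI publishes one as the tiktoken library), but a rough estimate is often enough to sanity-check prompt length and cost. Here's a minimal sketch assuming the common rule of thumb of about four characters per token for English text; estimate_tokens is a hypothetical helper.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Roughly estimate a token count from character length.

    Heuristic only (about 4 characters per English token); a tokenizer gives exact counts.
    """
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


if __name__ == "__main__":
    prompt = "Tell me what a GPT-3 Model is in a friendly way"
    print(estimate_tokens(prompt))
```

For "How are you?" this estimates 3 tokens, one fewer than the 4 mentioned above, so treat the result as approximate and leave some headroom.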

Pricing and GPT-3

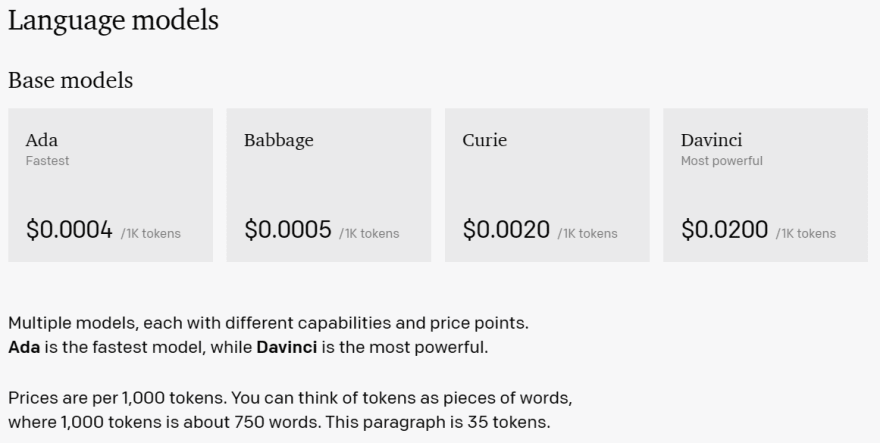

Next, let's highlight the cost side of things. With GPT-3, you pay based on the number of tokens you send to and receive from the API, at a rate that depends on the engine you choose to use.

At the time of this writing, pricing ranges from four hundredths of a cent to two cents per thousand tokens that go through GPT-3. However, you should always check the latest pricing information before making decisions.
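Since billing is per thousand tokens, estimating the cost of a workload is simple arithmetic. The sketch below uses the low and high rates quoted above purely as examples (check current pricing) and a hypothetical workload of 1,000 requests averaging 150 tokens each; estimate_cost_usd is a hypothetical helper. For a real request, the total_tokens figure is reported in the usage section of the API response.

```python
def estimate_cost_usd(total_tokens: int, usd_per_1k_tokens: float) -> float:
    """Cost of tokens billed per thousand (prompt plus completion tokens)."""
    if total_tokens < 0 or usd_per_1k_tokens < 0:
        raise ValueError("token count and rate must be non-negative")
    return total_tokens / 1000 * usd_per_1k_tokens


if __name__ == "__main__":
    # Example rates: the low and high ends quoted at the time of writing.
    cheapest_rate = 0.0004  # four hundredths of a cent per 1K tokens
    priciest_rate = 0.02    # two cents per 1K tokens
    tokens = 1_000 * 150    # hypothetical: 1,000 requests of ~150 tokens each
    print(f"${estimate_cost_usd(tokens, cheapest_rate):.2f}")  # $0.06
    print(f"${estimate_cost_usd(tokens, priciest_rate):.2f}")  # $3.00
```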

You have a few things you can do to control costs with GPT-3:

First, you can limit the max_tokens in the request to the API, as we did above.

Second, you can make sure you constrain n to 1 to only generate a single draft of a response instead of multiple separate drafts.

Third, you can choose to use a less capable / accurate language model at a cheaper rate. The code above used the Davinci model, but Curie, Babbage, and Ada are all faster and cheaper than Davinci.

However, if the quality of your output is paramount, using the most capable language model might be the most important factor for you.
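The first two levers multiply together: each of the n completions can be up to max_tokens long, so the generated text in a single request is bounded by n times max_tokens. A minimal sketch of that bound, using the max_tokens=100 from the function earlier; max_completion_tokens is a hypothetical helper.

```python
def max_completion_tokens(max_tokens: int, n: int = 1) -> int:
    """Upper bound on tokens generated by one request.

    Each of the n completions can be up to max_tokens long, so the total
    generated text is bounded by their product.
    """
    if max_tokens < 1 or n < 1:
        raise ValueError("max_tokens and n must both be at least 1")
    return max_tokens * n


if __name__ == "__main__":
    for n in (1, 3):
        # With max_tokens=100, n=1 caps generation at 100 tokens; n=3 triples the cap.
        print(f"n={n}: up to {max_completion_tokens(100, n)} generated tokens")
```

This only bounds the generated side; the prompt's tokens are billed on top of it.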

You can get more information on the available models using the models API.

You can also list the IDs of all available models with the following Python code:

```python
models = ai.Model.list()

for model in models.data:
    print(model.id)
```

Of course, you'll need to search for more information about each model that interests you, as this list will grow over time.
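Because that list keeps growing, it can help to narrow it down to the model families mentioned in the pricing discussion. Here's a sketch that groups model ID strings by family name; group_by_family is a hypothetical helper, and the sample IDs are examples rather than a current list. You could feed it [model.id for model in models.data] from the snippet above.

```python
from typing import Dict, Iterable, List

# The GPT-3 model families discussed in the pricing section, most capable first.
FAMILIES = ("davinci", "curie", "babbage", "ada")


def group_by_family(model_ids: Iterable[str]) -> Dict[str, List[str]]:
    """Group model IDs by the first family name they contain; other IDs are skipped."""
    groups: Dict[str, List[str]] = {family: [] for family in FAMILIES}
    for model_id in sorted(model_ids):
        for family in FAMILIES:
            if family in model_id:
                groups[family].append(model_id)
                break
    return groups


if __name__ == "__main__":
    sample_ids = ["text-davinci-003", "text-ada-001", "whisper-1", "davinci", "text-curie-001"]
    for family, ids in group_by_family(sample_ids).items():
        print(family, ids)
```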

Generating Text from a Prompt

Finally, let's put all the pieces together into a main method by calling our function:

```python
if __name__ == '__main__':
    prompt = 'Tell me what a GPT-3 Model is in a friendly way'
    response = generate_gpt3_response(prompt)
    print(response)
```

This will provide a specific prompt to our function and then display the response in the console.

For me, this generated the following quote:

GPT-3 is a type of artificial intelligence model that uses natural language processing to generate text. It is trained on a huge dataset of text and can be used to generate natural-sounding responses to questions or prompts. It can be used to create stories, generate summaries, and even write code.

If running this gives you issues, I recommend calling generate_gpt3_response with print_output set to True. This will print the JSON received from OpenAI directly to the console, which can expose potential issues.

Finally, due to high traffic to ChatGPT at the moment, you may occasionally receive "overloaded" error responses from the server. If this happens, try again after a while.
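Rather than retrying by hand, you can wrap the call in a retry loop with exponential backoff. The sketch below is generic: call_with_retries is a hypothetical helper, and which exception types to retry on is an assumption you'll need to check against your openai version (the pre-1.0 package defines classes such as openai.error.RateLimitError and openai.error.ServiceUnavailableError).

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    func: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying with exponential backoff plus jitter when it raises one of retry_on."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the last error
            # Wait base_delay, then 2x, 4x, ... with up to 10% random jitter.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay * (1 + random.random() * 0.1))
    raise AssertionError("unreachable")
```

For example, you might call call_with_retries(lambda: generate_gpt3_response(prompt), retry_on=(ai.error.RateLimitError, ai.error.ServiceUnavailableError)). The sleep parameter lets you swap in a no-op when testing.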

Closing Thoughts

I find the code to work with OpenAI's transformer models very simple and straightforward.

While I have been studying transformers for about half a year now at the time of this writing, I think we all had a collective "Oh wow, how can we put this in our apps?" moment when ChatGPT was unveiled.

The OpenAI API lets you work with transformer-based models like GPT-3 and others using a very small amount of Python code at a fairly affordabl