In my previous article, I talked about synchronous AWS Lambda & Amazon API Gateway limits and what to do about them.

As stated in that blog post, the ultimate solution to a “big payload” problem is making the architecture asynchronous. Let us then zoom in on asynchronous communication in the context of the Amazon API Gateway service and build a serverless architecture based on the notion of “pending work”.

Problem refresher

The following illustrates the Amazon API Gateway & AWS Lambda payload size problem I touched on in the previous article.

No matter how hard you try, you are unable to synchronously send more than 6 MB of data to your AWS Lambda function. The limit can seriously mess with your critical data-processing needs.

Luckily, there are ways to process much more data via AWS Lambda using asynchronous workflows.

The starting line: pushing the data to the storage layer

Suppose you were to ask the community about a potential solution to this problem. In that case, I bet that the most common answer would be to use Amazon S3 as the data storage layer and utilize presigned URLs to push the data to Amazon S3 storage.

While very common, the presigned URL flow to Amazon S3 can be nuanced, especially since one can create the presigned URL in two ways.

Describing these would make this article a bit too long for my liking, so I will refer you to this great article by my colleague Zac Charles, which does the topic much more justice than I ever could.
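For orientation only, here is a minimal sketch of what the two ways might look like with boto3. I am assuming here that the two ways are a presigned PUT URL and a presigned POST; the function names, the bucket and key values, and the five-minute expiry are all made up for illustration. The S3 client is passed in as a parameter so the helpers stay easy to test.

```python
from typing import Any


def create_presigned_put(s3_client: Any, bucket: str, key: str, expires_in: int = 300) -> str:
    """Way 1 (assumed): a presigned URL the user can HTTP PUT the object body to."""
    return s3_client.generate_presigned_url(
        ClientMethod="put_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )


def create_presigned_post(
    s3_client: Any, bucket: str, key: str, max_bytes: int, expires_in: int = 300
) -> dict:
    """Way 2 (assumed): a presigned POST whose policy caps the upload size."""
    return s3_client.generate_presigned_post(
        Bucket=bucket,
        Key=key,
        # The policy rejects uploads outside the 1..max_bytes range.
        Conditions=[["content-length-range", 1, max_bytes]],
        ExpiresIn=expires_in,
    )


# Usage (requires boto3 and AWS credentials; names are hypothetical):
#   import boto3
#   s3 = boto3.client("s3")
#   url = create_presigned_put(s3, "my-upload-bucket", "uploads/report.json")
#   post = create_presigned_post(s3, "my-upload-bucket", "uploads/report.json", 50_000_000)
```

The presigned POST variant returns a URL plus form fields that the user has to send along with the file, which is one of the nuances the linked article covers in depth.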

I have the data in Amazon S3. Now what?

Before processing the data, our system must know whether the user used the presigned URL to push the data to the storage layer. To the best of my knowledge, there are two options we can pursue here (please let me know if there are other ways to go about it, I am very keen to learn!).

I omitted the AWS CloudTrail to Amazon EventBridge flow on purpose, as I think engineers should favor the direct integration with Amazon EventBridge instead.

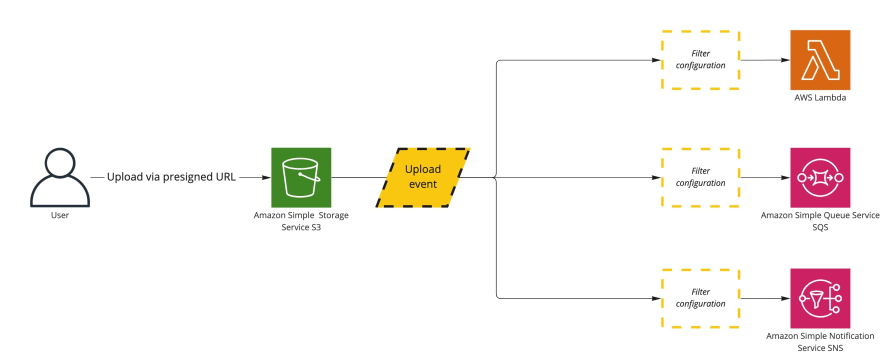

S3 Event Notifications

Amazon S3 event notifications were, until recently, the de facto way of finding out whether data had landed in Amazon S3.

While sufficient for most use-cases, the feature is not without its problems, the biggest of which, I would argue, are the misunderstandings around event filtering and IaC implementation.

The most important thing to keep in mind when it comes to event filtering is that you cannot use wildcards for prefix or suffix matching in your filtering rules. There are more things to consider, though. If you plan to use the filtering feature of S3 event notifications, I strongly encourage you to read this documentation page thoroughly.
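To illustrate the filtering rules, here is a small sketch that builds a notification configuration with plain prefix and suffix rules. The helper name, the ARN, and the prefix and suffix values are hypothetical, and the check that refuses a `*` is my own guard for this example, not something the S3 API does for you.

```python
def build_notification_config(function_arn: str, prefix: str = "", suffix: str = "") -> dict:
    """Build the NotificationConfiguration for ObjectCreated events."""
    for value in (prefix, suffix):
        if "*" in value:
            # Illustrative guard: filter rules do plain prefix/suffix matching only.
            raise ValueError(f"wildcards are not supported in filter rules: {value!r}")

    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})

    config = {"LambdaFunctionArn": function_arn, "Events": ["s3:ObjectCreated:*"]}
    if rules:
        config["Filter"] = {"Key": {"FilterRules": rules}}
    return {"LambdaFunctionConfigurations": [config]}


# Usage (requires boto3 and AWS credentials; names are hypothetical):
#   import boto3
#   boto3.client("s3").put_bucket_notification_configuration(
#       Bucket="my-upload-bucket",
#       NotificationConfiguration=build_notification_config(
#           "arn:aws:lambda:eu-west-1:123456789012:function:process-upload",
#           prefix="uploads/",
#           suffix=".json",
#       ),
#   )
```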

On the IaC side of things, know that, when creating the AWS CloudFormation template, you might end up with circular dependency problems. Deployment frameworks like AWS CDK will make sure that never happens. However, I still think you should be aware of this potential problem, even if you use deployment frameworks.

EventBridge events

In late 2021, AWS announced Amazon EventBridge support for S3 Event Notifications. The announcement received a warm welcome in the serverless community, as the EventBridge integration solves most of the “native” S3 event notification problems.

Using Amazon EventBridge for S3 events gives you a more extensive integration surface area (you can forward the event to more targets) and better filtering capabilities (one of the strong points of Amazon EventBridge). The integration is not without its problems, though.

For starters, the events are sent to the “default” bus, and using EventBridge might be more costly for high event volumes. To learn more about the different caveats, consider giving this great article a read.
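As a rough sketch of the better filtering, the following builds an EventBridge event pattern that matches S3 “Object Created” events under a key prefix, and pulls the object location out of a matching event. The helper names and the bucket and prefix values are hypothetical, and the sketch assumes EventBridge notifications are already enabled on the bucket.

```python
from typing import Tuple


def build_object_created_pattern(bucket: str, key_prefix: str) -> dict:
    """Event pattern for S3 'Object Created' events under a key prefix."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            # Prefix matching is done by EventBridge content filtering.
            "object": {"key": [{"prefix": key_prefix}]},
        },
    }


def extract_object_location(event: dict) -> Tuple[str, str]:
    """Return (bucket, key) from an S3 event delivered through EventBridge."""
    detail = event["detail"]
    return detail["bucket"]["name"], detail["object"]["key"]


# Usage (requires boto3 and AWS credentials; names are hypothetical):
#   import json, boto3
#   boto3.client("events").put_rule(
#       Name="uploads-created",
#       EventPattern=json.dumps(build_object_created_pattern("my-upload-bucket", "uploads/")),
#   )
```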

Munching on the data

You have the data in Amazon S3 storage, and you have a way to notify your system about that fact. Now what? How could you process the data and yield the result back to the user?

Given the nature of AWS, for better or worse, there are multiple ways one can move forward. We will start from the “simplest” architecture and work our way up to deploying an orchestrator with shared, high-speed data access.

Processing with a dedicated AWS Lambda

The AWS Lambda service is often called the “Swiss Army knife” of serverless. Most of the time, processing the data in-memory within the AWS Lambda function is sufficient.

The only limitations are your imagination and the AWS Lambda service timeout (the 15-minute maximum function runtime). Please note that in this setup, your AWS Lambda function must spend some of that time downloading the object.

I am being vague about saving the results of the performed work on purpose, as it is very use-case dependent. You might want to save the outcome of your work back to Amazon S3 or add an entry to a database. Up to you.
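A minimal sketch of such a dedicated function could look like the following, assuming it is triggered by an S3 event notification. The `summarize` step is a hypothetical stand-in for your real workload, and the S3 client is injected so the logic can be exercised without AWS.

```python
from typing import Any
from urllib.parse import unquote_plus


def summarize(body: bytes) -> dict:
    """Hypothetical processing step; replace with the real work."""
    return {"bytes": len(body), "lines": body.count(b"\n")}


def process_object(s3_client: Any, bucket: str, key: str) -> dict:
    # The download itself counts against the function's 15-minute budget.
    response = s3_client.get_object(Bucket=bucket, Key=key)
    body = response["Body"].read()  # whole object is held in memory
    return summarize(body)


def handle_s3_notification(s3_client: Any, event: dict) -> list:
    """Process every record of an S3 event notification."""
    results = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append(process_object(s3_client, bucket, key))
    return results


# Usage inside a Lambda handler (requires boto3):
#   import boto3
#   s3 = boto3.client("s3")
#   def handler(event, context):
#       return handle_s3_notification(s3, event)
```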

But what if that 15-minute timeout limitation is a thorn in your side? What if the process within the compute layer of the solution is complex and would benefit from being split into multiple chunks? If that is the case, keep on reading, as we are going to be talking about AWS Step Functions next.

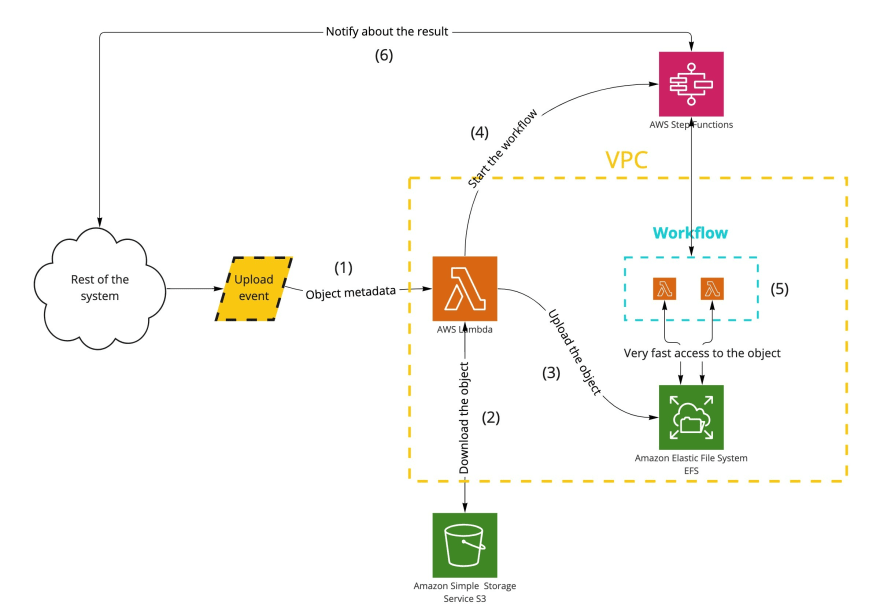

Processing with AWS Step Functions

If the compute process within the AWS Lambda function we looked at previously is complex or takes more time than the hard timeout limit, AWS Step Functions might be just the service you need.

Take note of the number of times the code within your Step Functions definition needs to download the object from Amazon S3 storage (the illustration being an example, of course). Depending on the workload, that number may vary, but no matter the workflow, past a certain threshold, it does feel “wasteful” to me to have to download the object again and again.

Keep in mind that the AWS Step Functions maximum payload size is 256 KB. That is why you have to download the object repeatedly whenever you need access to it.
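One way to live with that limit is to pass a pointer to the data between states instead of the data itself. The following is only a sketch of that idea: the `inline` and `pointer` output shapes and the function name are my own invention, and the S3 client is injected for testability.

```python
import json
from typing import Any

# The Step Functions payload limit mentioned above.
MAX_STATE_PAYLOAD_BYTES = 256 * 1024


def to_state_output(s3_client: Any, bucket: str, key: str, result: dict) -> dict:
    """Return the result inline when it fits, otherwise store it and return a pointer."""
    inline = {"inline": result}
    if len(json.dumps(inline).encode("utf-8")) <= MAX_STATE_PAYLOAD_BYTES:
        return inline

    # Too big for the state payload: park it in S3 and hand over its location.
    s3_client.put_object(Bucket=bucket, Key=key, Body=json.dumps(result).encode("utf-8"))
    return {"pointer": {"bucket": bucket, "key": key}}
```

The next state then checks which shape it received and, for a pointer, downloads the object itself, which is exactly the repeated download described above.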

You could implement in-memory caching in your AWS Lambda function, but that approach only applies to a single AWS Lambda function and is tied to its container lifecycle.
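For completeness, such a cache can be as small as a module-level dictionary, as in this sketch (the names are hypothetical). The dictionary survives between invocations only while the same container stays warm, which is precisely the limitation described above.

```python
from typing import Any, Dict, Tuple

# Lives as long as the Lambda container; gone on a cold start.
_OBJECT_CACHE: Dict[Tuple[str, str], bytes] = {}


def get_object_cached(s3_client: Any, bucket: str, key: str) -> bytes:
    """Download the object once per container and reuse it afterwards."""
    cache_key = (bucket, key)
    if cache_key not in _OBJECT_CACHE:
        response = s3_client.get_object(Bucket=bucket, Key=key)
        _OBJECT_CACHE[cache_key] = response["Body"].read()
    return _OBJECT_CACHE[cache_key]
```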

Depending on the requirements and constraints, I like to use the Amazon EFS integration with AWS Lambda in such situations. Amazon EFS allows me to have a storage layer shared by all AWS Lambda functions that take part in the workflow. Let us look into that next.

Shared AWS Lambda storage

Before starting, understand that using Amazon EFS with AWS Lambda functions requires Amazon VPC. For some workflows, this fact does not change anything. For others, it does. I strongly recommend keeping an open mind (I know some people from the serverless community despise VPCs) and evaluating the architecture according to your business needs.

This architecture is, arguably, quite complex. You have to consider some networking concerns. If your object is quite big and downloading it takes a lot of time, I would advise you to look into this architecture. VPCs do not bite!

If you yearn for a practical example, here is a sample architecture I’ve built for parsing media files. It uses Amazon EFS for fast access to the video file across all AWS Lambda functions involved in the AWS Step Functions workflow.
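To give a feel for the idea (this is not the code from the sample architecture), here is a sketch of a helper that the first step of a workflow could call to place the object on the shared file system, so that later steps read it from disk. The `/mnt/shared` mount path and the function name are assumptions, and the sketch does not sanitize the object key.

```python
import os
from pathlib import Path
from typing import Any


def ensure_shared_copy(s3_client: Any, bucket: str, key: str, mount_dir: str = "/mnt/shared") -> Path:
    """Download the object to the shared mount unless a copy is already there."""
    target = Path(mount_dir) / key
    if target.exists():
        return target  # another function in the workflow already fetched it

    target.parent.mkdir(parents=True, exist_ok=True)
    # Download under a temporary name, then rename, so readers never
    # observe a half-written file.
    partial = target.with_name(f"{target.name}.{os.getpid()}.part")
    s3_client.download_file(bucket, key, str(partial))
    os.replace(partial, target)
    return target


# Usage inside a Lambda function with an EFS access point mounted at /mnt/shared:
#   import boto3
#   path = ensure_shared_copy(boto3.client("s3"), "my-upload-bucket", "media/clip.mp4")
```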

Yielding back to the user

With the compute part behind us, it is time to see how we might notify the user that our system has processed the object and that the results are available.

No matter what kind of solution you choose to notify the user about the result, the state of the work has to be saved somewhere. Is the work pending? Is it finished? Maybe an error occurred?

Keeping track of the work status

The following is a diagram of an example architecture that keeps track of the status of the performed work.

The database sends CDC events to the system. We could use these CDC events to notify the user about the work progress in real-time! (That might not be necessary, though. In most scenarios, having the user poll for the results is sufficient.)

In my humble opinion, the key:value nature of the “work progress” data makes Amazon DynamoDB a perfect choice for the database component (nothing is stopping you from using RDS, which also supports CDC events, which themselves are optional here).
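As a sketch of what tracking the status in Amazon DynamoDB could look like, the helper below builds the arguments for a conditional `update_item` call. The status names, the allowed transitions, and the `workId` and `status` attribute names are all assumptions made for this example.

```python
# Hypothetical work lifecycle: PENDING -> PROCESSING -> DONE, with FAILED as an exit.
ALLOWED_TRANSITIONS = {
    "PENDING": {"PROCESSING", "FAILED"},
    "PROCESSING": {"DONE", "FAILED"},
}


def build_status_update(work_id: str, current: str, new: str) -> dict:
    """Build update_item arguments that move a work item from `current` to `new`."""
    if new not in ALLOWED_TRANSITIONS.get(current, set()):
        raise ValueError(f"illegal status transition: {current} -> {new}")
    return {
        "Key": {"workId": work_id},
        "UpdateExpression": "SET #s = :new",
        # Only succeed if the stored status is the one we expect.
        "ConditionExpression": "#s = :current",
        # "status" is a DynamoDB reserved word, hence the #s placeholder.
        "ExpressionAttributeNames": {"#s": "status"},
        "ExpressionAttributeValues": {":new": new, ":current": current},
    }


# Usage (requires boto3 and AWS credentials; the table name is hypothetical):
#   import boto3
#   table = boto3.resource("dynamodb").Table("work-status")
#   table.update_item(**build_status_update("job-123", "PENDING", "PROCESSING"))
```

The conditional write keeps two concurrent workers from both claiming the same item, and every successful update is what would surface as a CDC event if you choose to consume them.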

Notifying the user about the results

The last piece of the puzzle is making sure the user has a way to be notified about (or to retrieve) the status of the request.

Usually, in such situations, we have two options to consider: either we implement an API that the user is going to poll for updates, or we push the status directly to the user.

The first option, polling, would look similar to the following diagram in terms of architecture.

This architecture is sufficient up to a particular scale. The architecture must scale according to the number of pollers hitting the API. The more pollers actively engage with the API, the higher the chance of throttling or other issues (refer to the Thundering herd problem).
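On the client side, one common way to soften the thundering herd effect is to poll with exponential backoff and jitter. The sketch below assumes a `fetch_status` callable that wraps your status API and returns a status string; the terminal status names and the delay values are made up.

```python
import random
import time
from typing import Callable

TERMINAL_STATUSES = ("DONE", "FAILED")  # hypothetical status names


def poll_until_finished(
    fetch_status: Callable[[], str],
    max_attempts: int = 8,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Callable[[], float] = random.random,
) -> str:
    """Poll with exponential backoff and full jitter until the work finishes."""
    for attempt in range(max_attempts):
        if attempt > 0:
            # The ceiling doubles each round; the random factor spreads
            # pollers out so they do not hit the API in lockstep.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(rng() * ceiling)
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
    raise TimeoutError("work did not finish within the polling budget")
```

The `sleep` and `rng` parameters exist only so the waiting can be replaced in tests; regular callers can leave them at their defaults.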

In higher-throughput scenarios, one might want to replace the polling behavior with a push model based on WebSockets. For AWS-specific implementations, I would recommend Amazon API Gateway WebSocket support or AWS IoT Core WebSockets for broadcast-like use-cases.
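For the Amazon API Gateway WebSocket flavor, pushing a status update could be sketched as follows. I am assuming you store the connection IDs of interested users somewhere yourself; the function name and the payload shape are hypothetical, and the management API client is injected.

```python
import json
from typing import Any, Iterable, List


def push_status(api_client: Any, connection_ids: Iterable[str], payload: dict) -> List[str]:
    """Send the payload to every connection and return the IDs that are gone."""
    data = json.dumps(payload).encode("utf-8")
    stale = []
    for connection_id in connection_ids:
        try:
            api_client.post_to_connection(ConnectionId=connection_id, Data=data)
        except api_client.exceptions.GoneException:
            # The user disconnected; the caller can clean this ID up.
            stale.append(connection_id)
    return stale


# Usage (requires boto3 and AWS credentials; the endpoint is a placeholder):
#   import boto3
#   api = boto3.client(
#       "apigatewaymanagementapi",
#       endpoint_url="https://<api-id>.execute-api.<region>.amazonaws.com/<stage>",
#   )
#   stale = push_status(api, ["connection-id-1"], {"workId": "job-123", "status": "DONE"})
```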

I have written an article solely focused on serverless WebSockets on AWS. You can find the blog post here.

Closing words

And that is the end of our journey. We have looked at how to get the big payload into our system, process it, and respond to the user. Implementing this architecture is no small feat, and I hope you found the walkthrough helpful.

For more AWS serverless content, consider following me on Twitter: @wm_matuszewski

Thank you for your precious time.